
| layout | title | description | category | tags |
|---|---|---|---|---|
| post | A Case Study in Heaptrack | ...because you don't need no garbage collection | | |

One of my first conversations about programming went like this:

Programmers have it too easy these days. They should learn to develop in low memory environments and be efficient.

-- My Father (paraphrased)

Though it's not like the first code I wrote was for a graphing calculator, packing a whole 24KB of RAM. By the way, what are you doing on my lawn?

The principle remains though: be efficient with the resources you're given, because what Intel giveth, Microsoft taketh away. My professional work has been focused on this kind of efficiency; low-latency financial markets demand that you understand at a deep level exactly what your code is doing. As I've been experimenting with Rust for personal projects, it's exciting to bring that mindset with me. Rust gives me flexibility for the times when I'd rather have a garbage collector, and for the times when I really care about efficiency.

This post is a (small) case study in how I went from the former to the latter. And it's an attempt to prove how easy it is for you to do the same.

The Starting Line

When I first started building the `dtparse` crate, my intention was to mirror as closely as possible the equivalent Python library (`dateutil`'s parser). Python, as you may know, is garbage collected. Very rarely is memory usage considered in Python, and I likewise wasn't paying too much attention when `dtparse` was first being built.

That works out well enough, and I'm not planning on making that crate hyper-efficient. But every so often I've wondered: "what exactly is going on in memory?" With the advent of Rust 1.28 and the `GlobalAlloc` trait, I had a really great idea: build a custom allocator that allows you to track your own allocations. That way, you can do things like writing tests for both correct results and correct memory usage. I gave it a shot, but learned very quickly: never write your own allocator. It went from "fun weekend project" to "I have literally no idea what my computer is doing" at breakneck speed.

Instead, let's highlight another (easier) way you can make sense of your memory usage: heaptrack.

Turning on the System Allocator

This is the hardest part of the post. Because Rust used its own allocator (jemalloc) by default when I wrote this, heaptrack is unable to properly record what your code is doing out of the box. Instead, we need to tell our program to use the system allocator. (Since Rust 1.32 the system allocator is the default, so newer toolchains may not need this step.)

Specifically, in `lib.rs` or `main.rs`, make sure you add this:

```rust
use std::alloc::System;

#[global_allocator]
static GLOBAL: System = System;
```

Or look here for another example.
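For reference, here is a minimal sketch of a complete crate root with that attribute in place. The `count_words` function is a hypothetical workload (it is not part of `dtparse`), there only so heaptrack has some allocations to record; call it from your `main` and run the resulting binary under heaptrack.

```rust
use std::alloc::System;
use std::collections::HashMap;

// Route every heap allocation through the platform allocator (malloc/free),
// which is what heaptrack intercepts.
#[global_allocator]
static GLOBAL: System = System;

/// Hypothetical workload: maps each lowercased word to its occurrence count.
/// Every new key allocates a `String`, and the map allocates as it grows.
pub fn count_words(text: &str) -> HashMap<String, usize> {
    let mut counts = HashMap::new();
    for word in text.split_whitespace() {
        *counts.entry(word.to_lowercase()).or_insert(0) += 1;
    }
    counts
}
```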

Running heaptrack

Assuming you've installed heaptrack (Homebrew on Mac, your package manager on Linux, ??? on Windows), all that's left is to fire it up:

```
heaptrack my_application
```

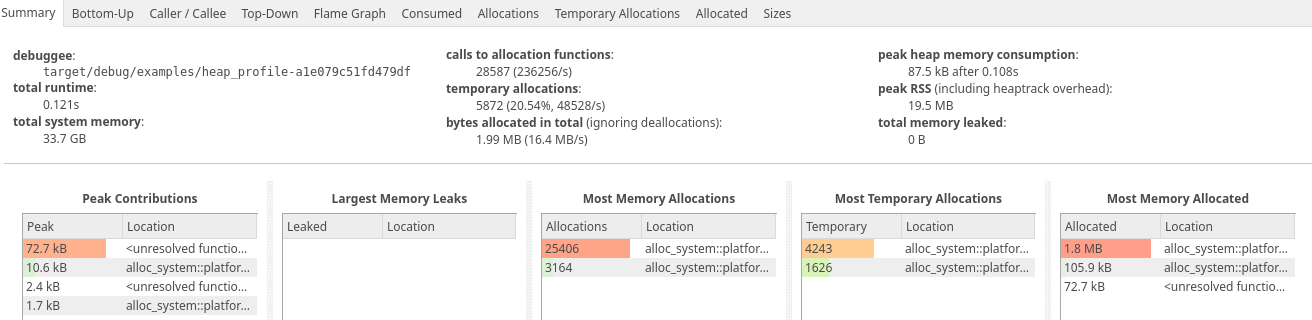

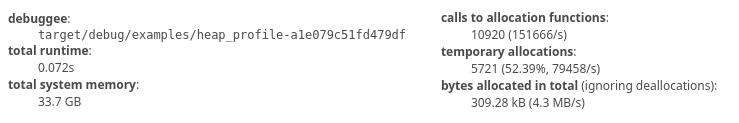

It's that easy. After the program finishes, you'll see a file in your local directory with a name

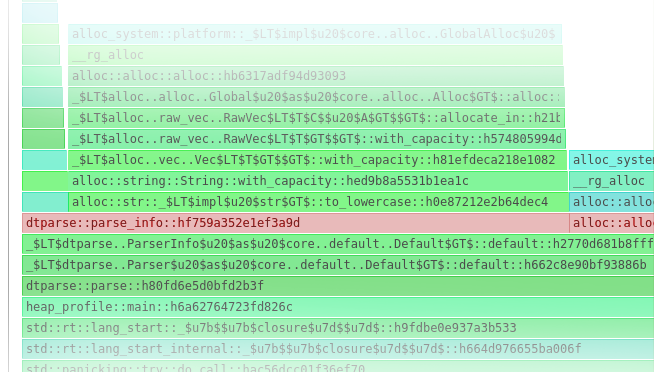

like heaptrack.my_appplication.XXXX.gz. If you load that up in heaptrack_gui, you'll see

something like this:

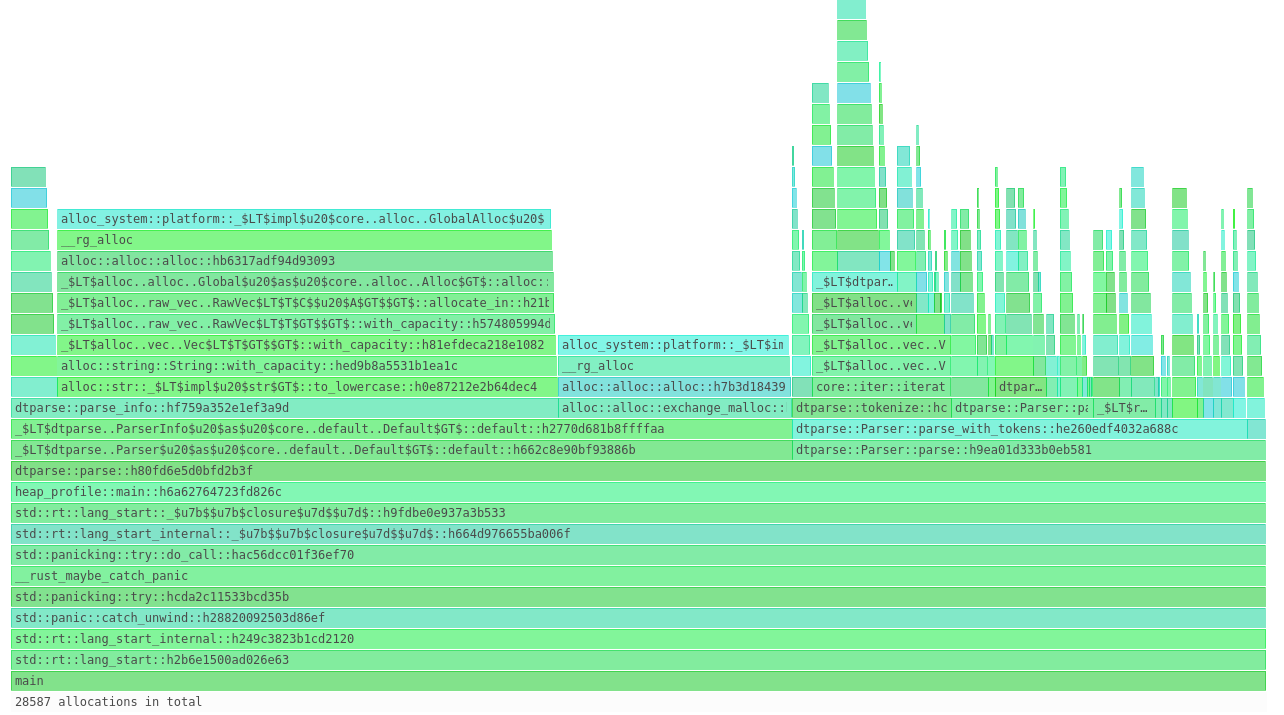

And even these pretty colors:

Reading Flamegraphs

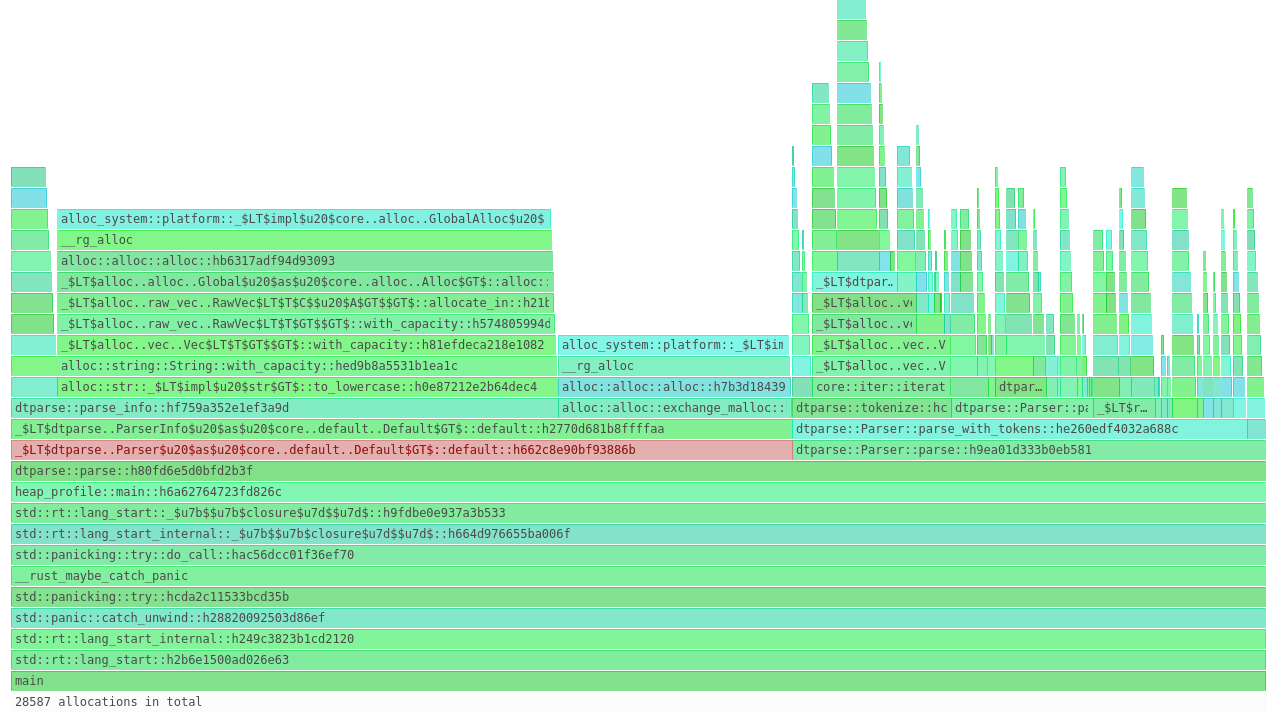

We're going to focus on the heap "flamegraph", which is the last picture I showed above. Normally these charts are used to show how much time you spend executing different functions, but here we'll use them to show how much memory was allocated during those functions.

As a quick introduction to reading flamegraphs, the idea is this: the width of each bar is how much memory was allocated by that function and by all the functions it calls.

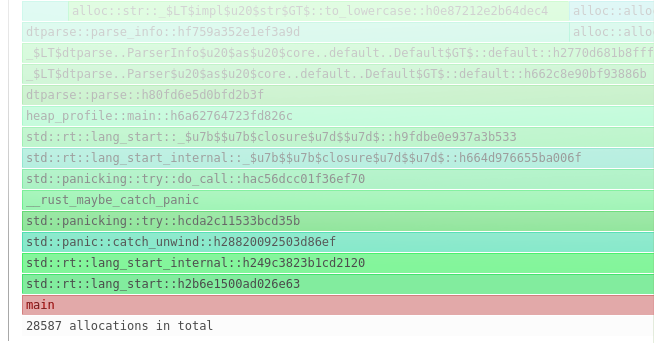

For example, we can see that all allocations happened during the `main` function:

...and within that, all allocations happened during `dtparse::parse`:

...and within that, allocations happened in two main places:

Now I apologize that it's hard to see, but there's one area specifically that stuck out as an issue: what the heck is the `Default` thing doing?
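To make the "width" rule concrete, here is a small sketch that computes each frame's inclusive allocation total from samples written in the semicolon-separated "folded stack" style that flamegraph tools commonly consume. The stack names and byte counts are made up for illustration; this is not heaptrack's file format, and the numbers are not `dtparse`'s.

```rust
use std::collections::BTreeMap;

/// Given (call stack, bytes allocated in the innermost frame) samples, return
/// the inclusive byte total for every stack prefix. That total is the width a
/// flamegraph gives the bar: bytes allocated by the function itself plus
/// everything allocated by the functions it calls.
pub fn inclusive_bytes(samples: &[(&str, u64)]) -> BTreeMap<String, u64> {
    let mut totals = BTreeMap::new();
    for &(stack, bytes) in samples {
        let mut prefix = String::new();
        for frame in stack.split(';') {
            if !prefix.is_empty() {
                prefix.push(';');
            }
            prefix.push_str(frame);
            // Every frame on the stack is charged for this allocation.
            *totals.entry(prefix.clone()).or_insert(0) += bytes;
        }
    }
    totals
}
```

With 900 bytes under `main;parse;Parser::default`, 100 under `main;parse;Parser::parse` and 50 under `main;setup`, `main` gets a width of 1050 and `main;parse` a width of 1000, so one greedy callee can dominate its parent's bar.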

Optimizing dtparse

See, I knew that there were some allocations that happen during the `dtparse::parse` method, but I was totally wrong about where the bulk of allocations occurred in my program.

Let me post the code and see if you can spot the mistake:

```rust
/// Main entry point for using `dtparse`.
pub fn parse(timestr: &str) -> ParseResult<(NaiveDateTime, Option<FixedOffset>)> {
    let res = Parser::default().parse(
        timestr, None, None, false, false,
        None, false,
        &HashMap::new(),
    )?;

    Ok((res.0, res.1))
}
```

The issue is that I keep creating a new `Parser` every time you call the `parse()` function!

Now this is a bit excessive, but it was necessary at the time because `Parser::parse()` took `&mut self`. In order to properly parse a string, the parser itself required mutable state.

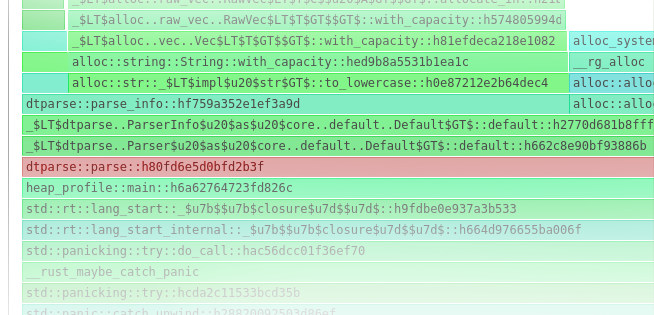

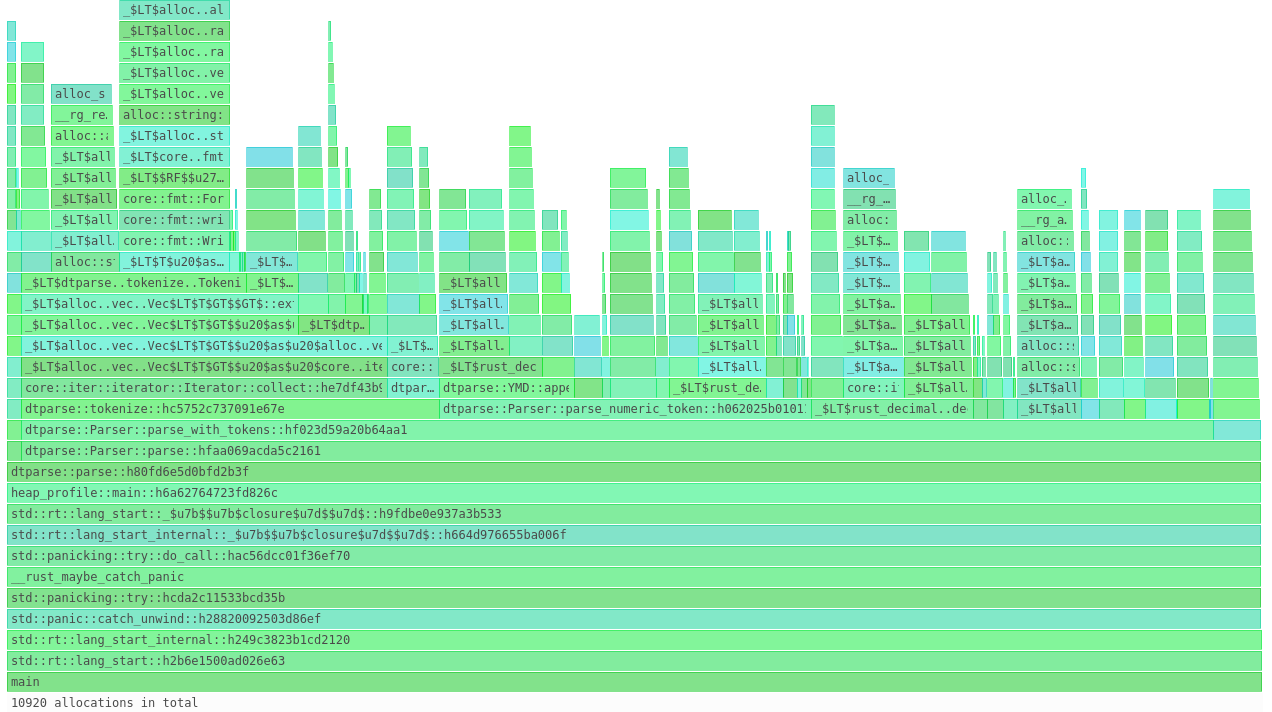

Armed with that information, I put some time in to make the parser immutable. Now I can re-use the same parser over and over! And would you believe it? No more allocations of default parsers:

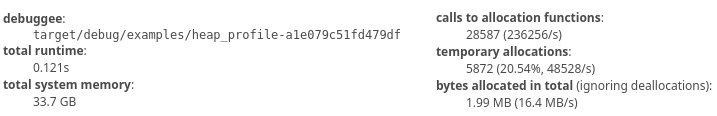
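The general pattern is worth spelling out: once a method only needs `&self`, a single instance can be built once and shared. Below is a minimal sketch using `std::sync::OnceLock` (Rust 1.70+). `Parser` here is a hypothetical stand-in with a month-name lookup table, not `dtparse`'s real type, and the `CONSTRUCTIONS` counter exists only to show how often the expensive setup runs; this isn't necessarily how `dtparse` implements the change.

```rust
use std::collections::HashMap;
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::OnceLock;

/// Counts how many parsers have been built (illustration only).
pub static CONSTRUCTIONS: AtomicUsize = AtomicUsize::new(0);

/// Hypothetical parser: its lookup table is allocated once at construction
/// and never modified afterwards.
pub struct Parser {
    months: HashMap<String, u32>,
}

impl Default for Parser {
    fn default() -> Self {
        CONSTRUCTIONS.fetch_add(1, Ordering::SeqCst);
        let names = ["jan", "feb", "mar", "apr", "may", "jun",
                     "jul", "aug", "sep", "oct", "nov", "dec"];
        // Pair each name with its month number, starting at 1.
        let months = names
            .iter()
            .zip(1..)
            .map(|(name, n)| (name.to_string(), n))
            .collect();
        Parser { months }
    }
}

impl Parser {
    /// Takes `&self`, not `&mut self`, so a single instance can be shared.
    pub fn month(&self, token: &str) -> Option<u32> {
        self.months.get(&token.to_lowercase()).copied()
    }
}

/// Before: a fresh parser (and all of its allocations) on every call.
pub fn month_fresh(token: &str) -> Option<u32> {
    Parser::default().month(token)
}

/// After: one lazily built parser, reused by every call.
pub fn month_shared(token: &str) -> Option<u32> {
    static PARSER: OnceLock<Parser> = OnceLock::new();
    PARSER.get_or_init(Parser::default).month(token)
}
```

Calling `month_fresh` three times builds three parsers; calling `month_shared` three times builds one. The shared `static` only works because `month` takes `&self`: a `static` can hand out `&Parser` but never `&mut Parser`, which is why a `&mut self` signature forces a fresh parser per call (short of adding a lock).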

In total, we went from requiring 2 MB of memory:

All the way down to 300KB:
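For scale, treating 2 MB as 2000 KB, that works out to roughly an 85% reduction (using 2048 KB instead gives about 85.4%, so the rounding doesn't change the picture):

$$
1 - \frac{300\ \text{KB}}{2000\ \text{KB}} = 0.85
$$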

Conclusion

In the end, you don't need to write a custom allocator to test memory performance. Rather, there are some great tools out there that you can put to work!

Use them.

Now that Moore's Law is dead, we've all got to do our part to take back what Microsoft stole.