a member of the iterated function system class of fractals

It's tedious, but technically correct. I choose to think of them a different way: beauty in mathematics.

I don't remember when exactly I first learned about fractal flames, but I do remember being entranced by the images they created. I also remember their unique appeal to my young engineering mind; this was an art form I could participate in.

The Fractal Flame Algorithm paper describing their structure was too much for me to handle at the time (I was ~12 years old), so I was content to play around and enjoy the pictures. But the desire to understand it stuck around. Now, with a graduate degree under my belt, I wanted to revisit it.

This guide is my attempt to explain how fractal flames work so that younger me — and others interested in the art — can understand without too much prior knowledge.

Iterated function systems

This post covers section 2 of the Fractal Flame Algorithm paper

As mentioned, fractal flames are a type of "iterated function system," or IFS. The formula for an IFS is short, but takes some time to work through:

Solution set

First, . is the set of points in two dimensions (in math terms, ) that represent a "solution" of some kind to our equation. Our goal is to find all the points in , plot them, and display that image.

For example, if we say , there are three points to plot:

With fractal flames, rather than listing individual points, we use functions to describe the solution. This means there are an infinite number of points, but if we find enough points to plot, we get a nice picture. And if the functions change, the solution also changes, and we get something new.

Transform functions

Second, the functions, also known as "transforms." Each transform takes in a 2-dimensional point and gives a new point back (in math terms, ). While you could theoretically use any function, we'll focus on a specific kind of function called an "affine transformation." Every transform uses the same formula:

export type Transform =

(x: number, y: number) =>

[number, number];

export interface Coefs {

a: number,

b: number,

c: number,

d: number,

e: number,

f: number

}

export function applyCoefs(

x: number,

y: number,

coefs: Coefs

): [number, number] {

return [

(x * coefs.a + y * coefs.b + coefs.c),

(x * coefs.d + y * coefs.e + coefs.f)

];

}

The parameters (, , etc.) are values we choose. For example, we can define a "shift" function like this:

Applying this transform to the original points gives us a new set of points:

Fractal flames use more complex functions, but they all start with this structure.

Fixed set

With those definitions in place, let's revisit the initial problem:

Or, in English, we might say:

Our solution, , is the union of all sets produced by applying each function, , to points in the solution.

There's just one small problem: to find the solution, we must already know which points are in the solution. What?

John E. Hutchinson provides an explanation in the original paper defining the mathematics of iterated function systems:

Furthermore, is compact and is the closure of the set of fixed points of finite compositions of members of .

Before your eyes glaze over, let's unpack this:

- Furthermore, is compact...: All points in our solution will be in a finite range

- ...and is the closure of the set of fixed points: Applying our functions to points in the solution will give us other points that are in the solution

- ...of finite compositions of members of : By composing our functions (that is, using the output of one function as input to the next), we will arrive at the points in the solution

Thus, by applying the functions to fixed points of our system, we will find the other points we care about.

If you want a bit more math...

...then there are some extra details I've glossed over so far.

First, the Hutchinson paper requires that the functions be contractive for the solution set to exist. That is, applying the function to a point must bring it closer to other points. However, as the fractal flame algorithm demonstrates, we only need functions to be contractive on average. At worst, the system will degenerate and produce a bad image.

Second, we're focused on because we're generating images, but the math allows for arbitrary dimensions; you could also have 3-dimensional fractal flames.

Finally, there's a close relationship between fractal flames and attractors. Specifically, the fixed points of act as attractors for the chaos game (explained below).

This is still a bit vague, so let's work through an example.

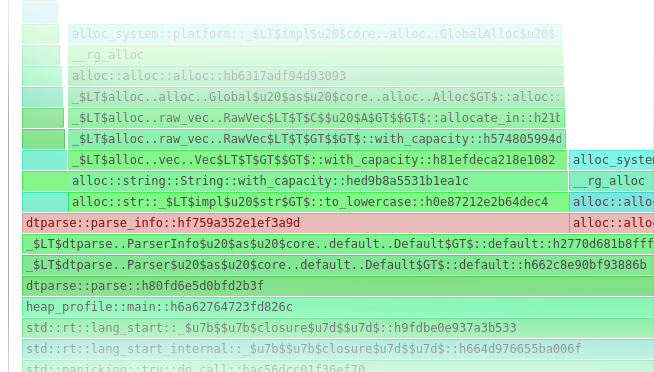

Sierpinski's gasket

The Fractal Flame paper gives three functions to use for a first IFS:

The chaos game

Now, how do we find the "fixed points" mentioned earlier? The paper lays out an algorithm called the "chaos game" that gives us points in the solution:

The chaos game algorithm is effectively the "finite compositions of " mentioned earlier.

Let's turn this into code, one piece at a time.

To start, we need to generate some random numbers. The "bi-unit square" is the range , and we can do this using an existing API:

export function randomBiUnit() {

return Math.random() * 2 - 1;

}

Next, we need to choose a random integer from to :

export function randomInteger(

min: number,

max: number

) {

let v = Math.random() * (max - min);

return Math.floor(v) + min;

}

Plotting

Finally, implementing the plot function. This blog series is interactive,

so everything displays directly in the browser. As an alternative,

software like flam3 and Apophysis can "plot" by saving an image to disk.

To see the results, we'll use the Canvas API. This allows us to manipulate individual pixels in an image and show it on screen.

First, we need to convert from fractal flame coordinates to pixel coordinates. To simplify things, we'll assume that we're plotting a square image with range for both and :

export function camera(

x: number,

y: number,

size: number

): [number, number] {

return [

Math.floor(x * size),

Math.floor(y * size)

];

}

Next, we'll store the pixel data in an ImageData object.

Each pixel on screen has a corresponding index in the data array.

To plot a point, we set that pixel to be black:

import { camera } from "./cameraGasket";

function imageIndex(

x: number,

y: number,

width: number

) {

return y * (width * 4) + x * 4;

}

export function plot(

x: number,

y: number,

img: ImageData

) {

let [pixelX, pixelY] =

camera(x, y, img.width);

// Skip coordinates outside the display

if (

pixelX < 0 ||

pixelX >= img.width ||

pixelY < 0 ||

pixelY >= img.height

)

return;

const i = imageIndex(

pixelX,

pixelY,

img.width

);

// Set the pixel to black by setting

// the first three elements to 0

// (red, green, and blue, respectively),

// and 255 to the last element (alpha)

img.data[i] = 0;

img.data[i + 1] = 0;

img.data[i + 2] = 0;

img.data[i + 3] = 0xff;

}

Putting it all together, we have our first image:

// Hint: try changing the iteration count const iterations = 100000; // Hint: negating `x` and `y` creates some cool images const xforms = [ (x, y) => [x / 2, y / 2], (x, y) => [(x + 1) / 2, y / 2], (x, y) => [x / 2, (y + 1) / 2] ]; function* chaosGame({ width, height }) { let img = new ImageData(width, height); let [x, y] = [ randomBiUnit(), randomBiUnit() ]; for (let i = 0; i < iterations; i++) { const index = randomInteger(0, xforms.length); [x, y] = xforms[index](x, y); if (i > 20) plot(x, y, img); if (i % 1000 === 0) yield img; } yield img; } render(<Gasket f={chaosGame} />);

The image here is slightly different than in the paper. I think the paper has an error, so I'm plotting the image like the reference implementation.

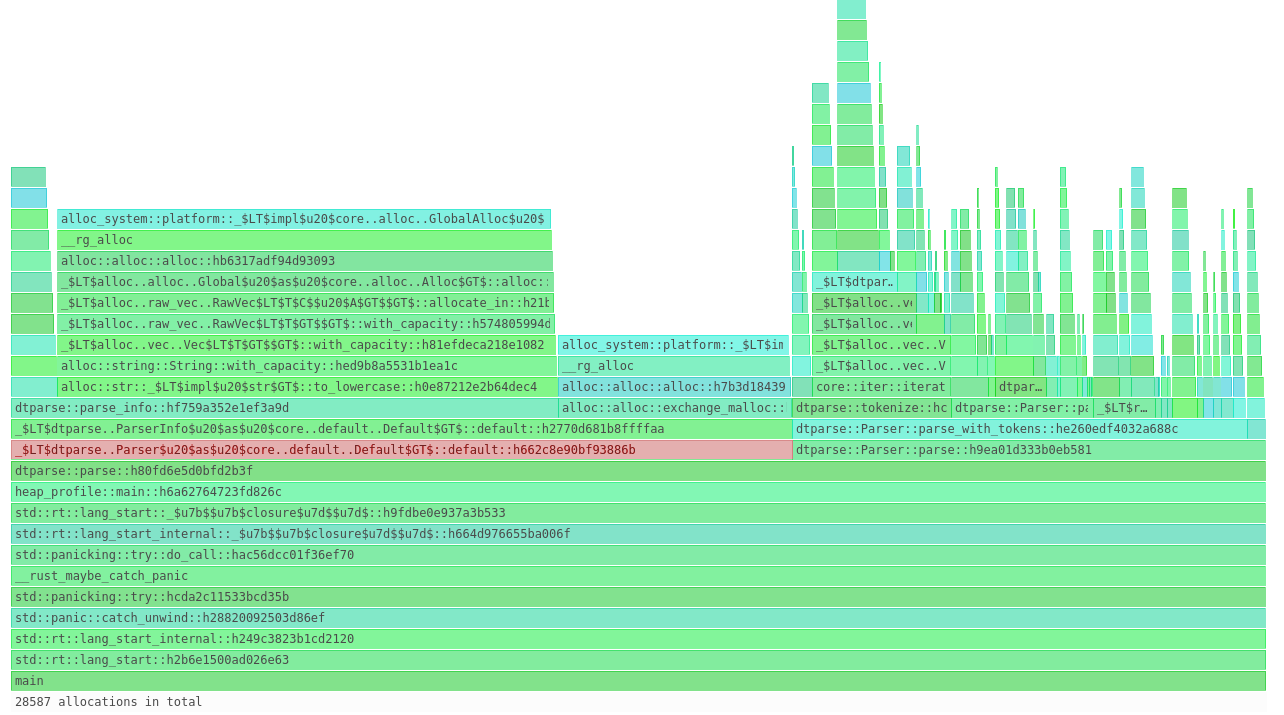

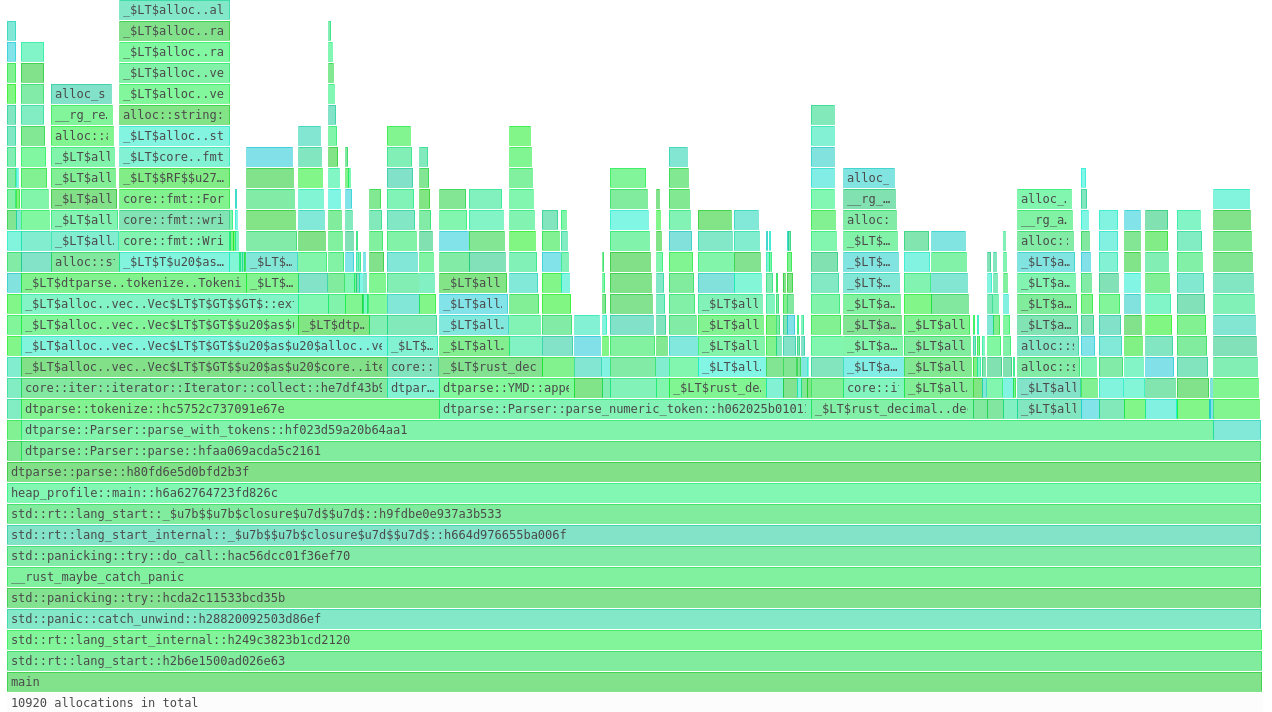

Weights

There's one last step before we finish the introduction. So far, each transform has the same chance of being picked in the chaos game. We can change that by giving them a "weight" () instead:

export function randomChoice<T>(

choices: [number, T][]

): [number, T] {

const weightSum = choices.reduce(

(sum, [weight, _]) => sum + weight,

0

);

let choice = Math.random() * weightSum;

for (const entry of choices.entries()) {

const [idx, elem] = entry;

const [weight, t] = elem;

if (choice < weight) {

return [idx, t];

}

choice -= weight;

}

const index = choices.length - 1;

return [index, choices[index][1]];

}

If we let the chaos game run forever, these weights wouldn't matter. But because the iteration count is limited, changing the weights means we don't plot some parts of the image:

import { randomBiUnit } from "../src/randomBiUnit";

import { randomChoice } from "../src/randomChoice";

import { plot } from "./plot";

import { Transform } from "../src/transform";

const quality = 0.5;

const step = 1000;

export type Props = {

width: number,

height: number,

transforms: [number, Transform][]

}

export function* chaosGameWeighted(

{ width, height, transforms }: Props

) {

let img =

new ImageData(width, height);

let [x, y] = [

randomBiUnit(),

randomBiUnit()

];

const pixels = width * height;

const iterations = quality * pixels;

for (let i = 0; i < iterations; i++) {

const [_, xform] =

randomChoice(transforms);

[x, y] = xform(x, y);

if (i > 20)

plot(x, y, img);

if (i % step === 0)

yield img;

}

yield img;

}

Double-click the image if you want to save a copy!

Summary

Studying the foundations of fractal flames is challenging, but we now have an understanding of the mathematics and the implementation of iterated function systems.

In the next post, we'll look at the first innovation of fractal flame algorithm: variations.

]]>